Programming languages

Interpreted vs compiled languages

- Interpreted: an interpreter executes the source code directly at runtime, rather than the code being compiled to machine code beforehand. At runtime, the interpreter translates and runs the program one line at a time.

- Compiled: a compiler first translates the source code into machine code before the program is run. When the program is run, that machine code is executed on the CPU.

Dynamic vs statically typed languages

The difference lies primarily in when types are checked for correctness.

- Static: Perform type checking at compile time as opposed to runtime

- Dynamic: Perform type checking at runtime
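
As a minimal sketch of the dynamic case, the Python snippet below passes a value of the wrong type to a function. Nothing complains until the offending line actually executes, at which point a `TypeError` is raised. The names `add_one` and `try_add_one` are made up for illustration; a statically typed language would typically reject the equivalent call before the program ever runs.

```python
def add_one(value):
    # No type is declared for `value`; the `+` is only checked when this line runs.
    return value + 1


def try_add_one(value):
    """Return the result, or the name of the exception raised at runtime."""
    try:
        return add_one(value)
    except TypeError as exc:
        return type(exc).__name__


print(try_add_one(41))    # 42
print(try_add_one("41"))  # TypeError
```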

Concurrency, Multi-threading, Async

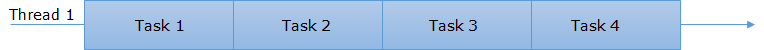

Synchronous, Single Thread

The standard programming model is a synchronous one: a thread (a set of instructions that can be executed independently of other code; the basic unit of CPU utilization) is assigned to one task, and the next task must wait until the current task is completed before starting.
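
A rough sketch of this model in Python, where `time.sleep` stands in for real work and the helper names (`run_task`, `run_synchronously`) are hypothetical. Each task runs to completion before the next one starts, so the log is strictly sequential.

```python
import time


def run_task(name, seconds, log):
    log.append(f"start {name}")
    time.sleep(seconds)  # placeholder for real work; the thread is blocked here
    log.append(f"end {name}")


def run_synchronously(tasks):
    log = []
    for name, seconds in tasks:
        run_task(name, seconds, log)  # the next task waits for this call to return
    return log


sync_log = run_synchronously([("A", 0.01), ("B", 0.01)])
print(sync_log)  # ['start A', 'end A', 'start B', 'end B']
```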

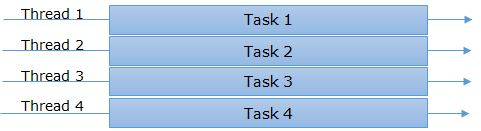

Synchronous, Multi-threaded

In this scenario we have multiple threads that can each take on a task and work on it independently of the tasks in other threads. A group of such threads is commonly referred to as a thread pool.
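
One possible way to try this out in Python is the standard library's `concurrent.futures.ThreadPoolExecutor`. In the sketch below, `fetch` and `run_in_pool` are illustrative names, and `time.sleep` is a stand-in for blocking work. Each task is still synchronous inside its own thread; the pool simply lets several of them be in progress at once.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor


def fetch(task_id):
    time.sleep(0.05)  # placeholder for blocking work done by this task
    # Report which pool thread handled the task.
    return task_id, threading.current_thread().name


def run_in_pool(task_ids, workers=3):
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() hands tasks to the pool and yields results in input order.
        return list(pool.map(fetch, task_ids))


pool_results = run_in_pool([1, 2, 3])
for task_id, thread_name in pool_results:
    print(task_id, thread_name)
```

The thread names printed will vary between runs, since the pool decides which thread picks up which task.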

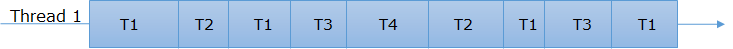

Asynchronous, Single Thread

In contrast to synchronous programming, a (single) thread can start executing a task, pause its work on it, and move on to another task. Such a pause usually occurs at a point where the task has to wait for an external process to finish, and sitting around doing nothing would waste time. Essentially, multiple tasks are interleaved on a single thread, switching between tasks at logical break/wait points.
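
A small sketch of this interleaving using Python's `asyncio`, which by default runs all coroutines on one thread. The coroutine names are made up, and `asyncio.sleep` stands in for waiting on an external process. Each `await` is a wait point where the thread can switch to the other task.

```python
import asyncio


async def task(name, delay, log):
    log.append(f"start {name}")
    await asyncio.sleep(delay)  # wait point: the thread is free to run another task
    log.append(f"end {name}")


async def main():
    log = []
    # Both tasks share one thread; A waits longer, so B finishes first.
    await asyncio.gather(task("A", 0.05, log), task("B", 0.01, log))
    return log


async_log = asyncio.run(main())
print(async_log)  # ['start A', 'start B', 'end B', 'end A']
```

Note that B starts before A has finished, which could not happen in the synchronous single-threaded model.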

Asynchronous, Multi-threaded

This is a simple extension of the asynchronous model. With multiple threads available, each can run tasks in an asynchronous fashion.

Concurrency

Concurrency refers to processing multiple requests at a time. In a programming environment, this can happen in a synchronous multi-threaded model or in an asynchronous one (single or multi-threaded).

On a lower level, you can have concurrency on single or multi-core machines. With multiple cores (i.e. multiple CPUs), you can have true concurrency, physically executing multiple tasks at the same time. On a single core, the CPU can only physically execute one process at a time. However, it employs context switching to quickly switch between processes to give the appearance of true concurrency. Note that, for work that keeps the CPU busy, this doesn’t mean that any time is saved; running the processes in a synchronous fashion would take an equal amount of time. However, “processes” are typically made up of smaller sets of instructions, and interleaving these instructions can make multiple processes appear to be running concurrently.

Multiprocessing

Multiprocessing refers to the use of multiple processes (instead of threads) to execute tasks at the same time.

Core vs Thread vs Process

Both processes and threads are independent sequences of execution. The primary difference is that threads run in a shared memory space, whereas processes run in separate memory spaces. The process is essentially just a program being executed by the processor somewhere in memory. A process can have multiple threads.
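
To illustrate the shared memory space, here is a hedged Python sketch in which several threads update the same in-memory counter. The names `counter`, `increment`, and `run_threads` are hypothetical. Because the threads really do see the same object, a lock is used so that their updates do not overwrite each other. Separate processes would each work on their own copy of the counter instead.

```python
import threading

counter = {"value": 0}   # one object, visible to every thread in this process
lock = threading.Lock()  # guards the shared counter against lost updates


def increment(times):
    for _ in range(times):
        with lock:
            counter["value"] += 1


def run_threads(n_threads, times):
    counter["value"] = 0
    threads = [threading.Thread(target=increment, args=(times,)) for _ in range(n_threads)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()  # wait for every thread to finish
    return counter["value"]


total = run_threads(4, 1000)
print(total)  # 4000
```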

A core is a single computing component with an independent CPU. Core=CPU=processor. It can only process one thing at any point in time. However, when it is said that a single core can run multiple threads, this simply refers to these threads being executed in an interleaved fashion, similar to the asynchronous model. You can have multiple cores, each running multiple threads.